Ansible User Management: Managing System Users Using Ansible

by Anish

Posted on Friday June 29

Introduction

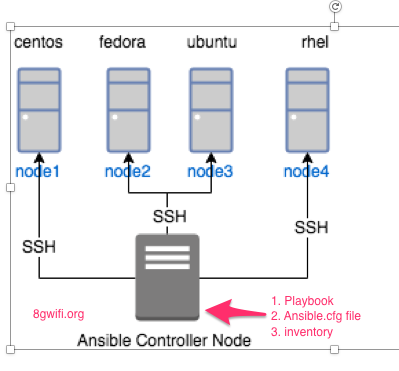

In this section we will learn how to manage users with Ansible in a cloud environment. We start by defining the architecture: as shown in this diagram, the Ansible controller node manages the various nodes over the SSH protocol. Extending this diagram, we will create playbooks that manage different users, along with their sudoers privileges, on the target nodes.

A quick recap of creating and setting up the ansible user on the controller and target nodes:

Ansible User Setup in Controller Node

Create the ansible remote user, which manages the installation from the Ansible controller node. This user should have appropriate sudo privileges; an example sudoers entry is given below.

Add the user ansible:

[root@controller-node ~]# adduser ansible

Switch to the ansible user:

[root@controller-node ~]# su - ansible

Generate a strong SSH key pair for the ansible user:

[ansible@controller-node ~]$ ssh-keygen -t rsa -b 4096 -C "ansible"

The key pair is written to /home/ansible/.ssh/. The public key (id_rsa.pub) is the file that has to end up in /home/ansible/.ssh/authorized_keys on each target node; the private key (id_rsa) stays on the controller.

[ansible@controller-node ~]$ cd /home/ansible/.ssh/
[ansible@controller-node .ssh]$ ls
id_rsa  id_rsa.pub

Note down the public key (id_rsa.pub) and copy it over to the other machines:

[ansible@controller-node .ssh]$ cat id_rsa.pub

ssh-rsa AAAAB3NzaC1yc2EAAAABIwAAAgEApeDUYGwaMfHd7/Zo0nzHA69uF/f99BYktwp82qA8+osph1LdJ/NpDIxcx3yMzWJHK0eg2yapHyeMpKuRlzxHHnmc99lO4tHrgpoSoyFF0ZGzDqgtj8IHS8/6bz4t5qcs0aphyBJK5qUYPhUqAL2Sojn+jLnLlLvLFwnv5CwSYeHYzLPHU7VWJgkoahyAlkdQm2XsFpa+ZpWEWTiSL5nrJh5aA3bgGHGJU2iVDxj2vfgPHQWQTiNrxbaSfZxdfYQx/VxIERZvc5vkfycBHVwanFD4vMn728ht8cE4VjVrGyTVznzrM7XC2iMsQkvmeYTIO2q2u/8x4dS/hBkBdVG/fjgqb78EpEUP11eKYM4JFCK7B0/zNaS56KFUPksZaSofokonFeGilr8wxLmpT2X1Ub9VwbZV/ppb2LoCkgG6RnDZCf7pUA+CjOYYV4RWXO6SaV12lSxrg7kvVYXHOMHQuAp8ZHjejh2/4Q4jNnweciuG3EkLOTiECBB0HgMSeKO4RMzFioMwavlyn5q7z4S82d/yRzsOS39qwkaEPRHTCg9F6pbZAAVCvGXP4nlyrqk26KG7S3Eoz3LZjcyt9xqGLzt2frvd+jLMpgvnlXTFgGA1ITExOHRb+FirmQZgnoiFbvpeIFj65d0vRIuY6VneIJ6EFcLGPpzeus0yLoDN1v8= ansible

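[ansible@controller-node .ssh]$ exit

Back in the root shell, run visudo. Make sure /etc/sudoers contains the #includedir /etc/sudoers.d line (despite the leading #, this is a directive, not a comment), and add the ansible user with passwordless sudo so that it can also manage the controller node itself:

[root@controller-node ~]# visudo

#includedir /etc/sudoers.d

ansible ALL=(ALL) NOPASSWD: ALL

Ansible User Setup in Target Node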
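If you prefer to keep even the controller bootstrap in code, the manual controller steps can be expressed as a small local play. This is a minimal sketch, assuming it is run once as root on the controller itself and Ansible 2.10 or later (for the ansible.builtin names). The file name controller-setup.yml and the drop-in path /etc/sudoers.d/ansible are illustrative choices, not part of the original setup; the drop-in only takes effect because of the #includedir line shown above.

```yaml
# controller-setup.yml (hypothetical file name)
# Run once as root on the controller: ansible-playbook controller-setup.yml
---
- hosts: localhost
  connection: local
  become: true
  tasks:
    - name: Create the ansible user with a 4096-bit RSA key pair
      ansible.builtin.user:
        name: ansible
        shell: /bin/bash
        create_home: true
        # writes /home/ansible/.ssh/id_rsa and id_rsa.pub; an existing key is left untouched
        generate_ssh_key: true
        ssh_key_type: rsa
        ssh_key_bits: 4096
        ssh_key_comment: ansible

    - name: Grant passwordless sudo through a validated drop-in file
      ansible.builtin.copy:
        dest: /etc/sudoers.d/ansible
        content: "ansible ALL=(ALL) NOPASSWD: ALL\n"
        owner: root
        group: root
        mode: "0440"
        # visudo checks the temporary copy before it is moved into place
        validate: /usr/sbin/visudo -cf %s
```

Putting the rule in its own file under /etc/sudoers.d keeps /etc/sudoers itself untouched, and the validate step means a typo never locks you out of sudo.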

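Create the user ansible, create the .ssh directory in its home directory, and create a file named authorized_keys inside .ssh. Set the directory permissions to 700 and the file permissions to 600 (only the owner can read or write the file); sshd can refuse keys when these permissions are too open. Because the playbooks below run with become: yes, the ansible user on each target node also needs the same sudoers entry as on the controller.

[root@node1 ~]# adduser ansible
[root@node1 ~]# su - ansible
[ansible@node1 ~]$ cd /home/ansible
[ansible@node1 ~]$ mkdir .ssh
[ansible@node1 ~]$ chmod 700 .ssh
[ansible@node1 ~]$ touch .ssh/authorized_keys
[ansible@node1 ~]$ chmod 600 .ssh/authorized_keys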
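With more than a couple of nodes, the same target-side setup can be pushed from the controller in a single run. A minimal sketch, assuming root password logins are still allowed for this first run (password logins also need the sshpass package on the controller) and that the ansible.posix collection, which provides authorized_key, is installed. bootstrap.yml is a hypothetical file name.

```yaml
# bootstrap.yml (hypothetical); first run only, for example:
#   ansible-playbook bootstrap.yml -i hosts -u root --ask-pass
---
- hosts: all
  tasks:
    - name: Create the ansible user on the target node
      ansible.builtin.user:
        name: ansible
        shell: /bin/bash
        create_home: true

    - name: Install the controller's public key for the ansible user
      # creates ~/.ssh and authorized_keys with restrictive permissions when missing
      ansible.posix.authorized_key:
        user: ansible
        # the lookup reads the file on the controller, not on the target
        key: "{{ lookup('file', '/home/ansible/.ssh/id_rsa.pub') }}"
        state: present

    - name: Allow passwordless sudo so later plays can use become
      ansible.builtin.copy:
        dest: /etc/sudoers.d/ansible
        content: "ansible ALL=(ALL) NOPASSWD: ALL\n"
        owner: root
        group: root
        mode: "0440"
        validate: /usr/sbin/visudo -cf %s
```

After this run, every later play can connect as ansible with its key and escalate with become: yes.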

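Copy the SSH public key (id_rsa.pub) from the Ansible controller node to every VM managed by the controller node. You can paste it into authorized_keys by hand, or run ssh-copy-id as the ansible user on the controller; ssh-copy-id appends the key to ~/.ssh/authorized_keys on the target, but it needs a working password login for the ansible user there (for example, after setting one with passwd). In this example the key was already installed on node3, so nothing was copied:

[ansible@localhost ~]$ ssh-copy-id ansible@node3
/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed
/bin/ssh-copy-id: WARNING: All keys were skipped because they already exist on the remote system.

Managing System Users: Operations Perspective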

Managing users in a cloud environment is both a security requirement and an infrastructure requirement. In general we deal with these sets of users:

- sudo users

- non-sudo users

- nologin users (system users)

For security hardening, servers running in the cloud should accept only passwordless (SSH key) logins. Through a change management process (for example, git), every user submits a public key, which is then deployed to the respective nodes by the Ansible controller.
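Accepting only key-based logins is an sshd setting. One way to enforce it from the controller is sketched below, assuming Linux nodes whose sshd reads /etc/ssh/sshd_config and whose service is named sshd (on Debian and Ubuntu it is ssh). On newer distributions, drop-in files under /etc/ssh/sshd_config.d/ can still override this line, so check them too. Confirm that key logins for the ansible user work before applying it, or you can lock yourself out.

```yaml
---
- hosts: all
  become: true
  tasks:
    - name: Disable password authentication in sshd
      ansible.builtin.lineinfile:
        path: /etc/ssh/sshd_config
        # matches both the active and the commented-out default line
        regexp: '^#?PasswordAuthentication\s'
        line: PasswordAuthentication no
        # sshd -t checks the edited copy before it replaces the real file
        validate: /usr/sbin/sshd -t -f %s
      notify: Restart sshd

  handlers:
    - name: Restart sshd
      ansible.builtin.service:
        name: sshd
        state: restarted
```

The handler fires only when the line actually changes, so re-running the play does not restart sshd needlessly.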

Managing System User Using Ansible

In the first section we will add the users; in the next section we will learn how to delete them.

Adding System Users Using the Ansible user Module

1. Create the directory structure on the controller; it should look like this:

[ansible@controller]$ tree
.
└── ssh
    ├── files
    ├── tasks
    │   └── main.yml
    └── vars

Common Definition

- ssh: the role that ansible-playbook runs

- files: place every user's public key in this directory

- tasks: the main.yml file in this directory is executed when the role runs

- vars: the user definitions are stored in this directory

2. Create the file users.yml and add the list of users, marking which ones need sudo access and which do not:

[ansible@controller]$ tree
.
└── ssh
    ├── files
    ├── tasks
    │   └── main.yml
    └── vars
        └── users.yml

[ansible@controller]$ cat ssh/vars/users.yml
---
users:
  - username: user2
    use_sudo: yes
  - username: user4
    use_sudo: no
  - username: user6
    use_sudo: no

3. Now submit the public keys of the users (user2, user4 and user6 in this example) to the files directory, which should then look like this:

[ansible@controller]$ tree
.
└── ssh
    ├── files
    │   ├── user2.pub
    │   ├── user4.pub
    │   └── user6.pub
    ├── tasks
    │   └── main.yml
    └── vars
        └── users.yml

4 directories, 5 files

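4. Next, define the tasks the role runs: in the tasks directory, edit main.yml and add the following definition:

[ansible@controller ~]$ cat ssh/tasks/main.yml
---
- include_vars: users.yml

- name: Create users with home directory
  user:
    name: "{{ item.username }}"
    shell: /bin/bash
    createhome: yes
    comment: Created by Ansible
  with_items: "{{ users }}"

# every listed user gets a key; <username>.pub is looked up in the role's files/ directory
- name: Setup | authorized key upload
  authorized_key:
    user: "{{ item.username }}"
    key: "{{ lookup('file', item.username ~ '.pub') }}"
  with_items: "{{ users }}"

- name: Sudoers | update sudoers file and validate
  lineinfile:
    dest: /etc/sudoers
    insertafter: EOF
    line: "{{ item.username }} ALL=(ALL) NOPASSWD: ALL"
    regexp: "^{{ item.username }} .*"
    state: present
    validate: /usr/sbin/visudo -cf %s
  when: item.use_sudo
  with_items: "{{ users }}"

Let's break down the code:

- include_vars: users.yml loads the users list from the role's vars directory.

- Create users with home directory: the user module creates each listed user with a bash shell and a home directory.

- Setup | authorized key upload: authorized_key adds each user's public key (files/<username>.pub) to that user's ~/.ssh/authorized_keys, so every listed user, with or without sudo, can log in with a key.

- Sudoers | update sudoers file and validate: only for users with use_sudo set (the when condition, which is why user4 and user6 show as skipping in the output below), lineinfile appends a passwordless sudo line. The regexp makes re-runs replace the existing line instead of adding a duplicate, and validate runs visudo -cf on the edited copy so a syntax error never replaces /etc/sudoers.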
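Appending to /etc/sudoers works, but a common alternative is one drop-in file per user under /etc/sudoers.d, which the #includedir directive pulls in. A sketch of that variant of the sudoers task, assuming the same users list and role layout; note that sudo skips files in that directory whose names contain a dot, so this assumes usernames without dots. Because each user has their own file, revoking sudo is just removing that file.

```yaml
# Variant of the "Sudoers" task in ssh/tasks/main.yml (sketch)
- name: Sudoers | one validated drop-in file per sudo user
  ansible.builtin.copy:
    dest: "/etc/sudoers.d/{{ item.username }}"
    content: "{{ item.username }} ALL=(ALL) NOPASSWD: ALL\n"
    owner: root
    group: root
    mode: "0440"
    validate: /usr/sbin/visudo -cf %s
  loop: "{{ users }}"
  when: item.use_sudo | bool

- name: Sudoers | remove the drop-in for users without sudo
  ansible.builtin.file:
    path: "/etc/sudoers.d/{{ item.username }}"
    state: absent
  loop: "{{ users }}"
  when: not (item.use_sudo | bool)
```

Flipping use_sudo from yes to no in users.yml then revokes the privilege on the next run, which the append-only lineinfile approach does not do by itself.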

Run the playbook

To run this playbook, make sure the Ansible inventory file is set up. An Ansible inventory file defines groups of servers; for this example I have created an inventory file named hosts and added to it all the nodes I need to manage.

[ansible@controller ~]$ tree
.
├── hosts
└── ssh
    ├── files
    │   ├── user2.pub
    │   ├── user4.pub
    │   └── user6.pub
    ├── tasks
    │   └── main.yml
    └── vars
        └── users.yml

4 directories, 6 files

[ansible@controller ~]$ cat hosts
[all]
node1
node2
node3

[ansible@localhost ansible]$
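The same inventory can also be written in Ansible's YAML inventory format. This is shown only as an equivalent alternative to the INI file above; hosts.yml is a hypothetical file name, used as ansible-playbook ssh.yml -i hosts.yml.

```yaml
# hosts.yml: YAML form of the INI inventory above
all:
  hosts:
    node1:
    node2:
    node3:
```

The YAML form becomes handy once you start attaching per-host or per-group variables, since they nest directly under each entry.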

Next, create ssh.yml, which tells ansible-playbook to apply the ssh role to all hosts:

[ansible@controller ~]$ cat ssh.yml
# To run this playbook, issue the command:
# ansible-playbook ssh.yml -i hosts
# Author: Anish Nath
---
- hosts: all
  become: yes
  gather_facts: yes
  roles:
    - { role: ssh }

Finally, run the playbook using the inventory file hosts:

[ansible@controller]$ ansible-playbook ssh.yml -i hosts

Snippet of the output:

ok: [node3] => (item={u'username': u'user2', u'use_sudo': True})

skipping: [node3] => (item={u'username': u'user4', u'use_sudo': False})

skipping: [node3] => (item={u'username': u'user6', u'use_sudo': False})

ok: [node1] => (item={u'username': u'user2', u'use_sudo': True})

skipping: [node1] => (item={u'username': u'user4', u'use_sudo': False})

skipping: [node1] => (item={u'username': u'user6', u'use_sudo': False})

ok: [node2] => (item={u'username': u'user2', u'use_sudo': True})

skipping: [node2] => (item={u'username': u'user4', u'use_sudo': False})

skipping: [node2] => (item={u'username': u'user6', u'use_sudo': False})

PLAY RECAP *************************************************************************************************************************************

node1 : ok=5 changed=1 unreachable=0 failed=0

node2 : ok=5 changed=1 unreachable=0 failed=0

node3 : ok=5 changed=1 unreachable=0 failed=0
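To confirm the result independently of the playbook output, a small read-only play can query each node. A sketch, assuming the same ssh/vars/users.yml file (vars_files paths are relative to the playbook) and a hypothetical file name verify-users.yml. ansible.builtin.getent fails the task if a user is missing from the passwd database, and sudo -l -U lists the sudo rules that apply to a given user.

```yaml
# verify-users.yml (hypothetical): read-only checks, makes no changes
---
- hosts: all
  become: true
  vars_files:
    - ssh/vars/users.yml
  tasks:
    - name: Fail if any listed user is missing from passwd
      ansible.builtin.getent:
        database: passwd
        key: "{{ item.username }}"
      loop: "{{ users }}"

    - name: Collect the sudo rules of users marked use_sudo
      ansible.builtin.command: sudo -l -U {{ item.username }}
      register: sudo_rules
      changed_when: false
      loop: "{{ users }}"
      when: item.use_sudo | bool

    - name: Print the collected sudo rules
      ansible.builtin.debug:
        var: item.stdout_lines
      loop: "{{ sudo_rules.results }}"
      # skipped (non-sudo) users have no stdout_lines
      when: item.stdout_lines is defined
```

Run it with ansible-playbook verify-users.yml -i hosts after each change to users.yml.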

Removing System Users using Ansible

In a cloud environment, users have a lifecycle: when a user is no longer required on the system, the account must be deleted, and this should happen proactively. For example, if user2 needs to be deleted, then through the change management process users.yml is edited to remove the entry for user2.

Before

[ansible@controller]$ cat ssh/vars/users.yml
---
users:
  - username: user2
    use_sudo: yes
  - username: user4
    use_sudo: no
  - username: user6
    use_sudo: no

After Deleting

[ansible@controller]$ cat ssh/vars/users.yml
---
users:
  - username: user4
    use_sudo: no
  - username: user6
    use_sudo: no

Now this user needs to be deleted across the cloud environment managed by the Ansible controller. To do this, create a file deleteusers.yml in the vars directory and maintain in it the set of users that need to be removed from the target nodes:

[ansible@controller ~]$ cat ssh/vars/deleteusers.yml
---
users:
  - username: user2
  - username: user3
  - username: user5

Next, update main.yml in the tasks directory and append the delete instructions:

- include_vars: deleteusers.yml

- name: Deleting The users
  user: name={{ item.username }} state=absent remove=yes
  with_items: '{{users}}'
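Because deleteusers.yml reuses the variable name users, whichever file is included last wins. One way to keep the two lists apart is include_vars with its name option, which loads the file under a separate variable. A sketch, assuming the deleteusers.yml file above; the variable name to_delete is hypothetical. It also drops any sudoers line the add tasks wrote for the user, since removing an account with the user module does not touch /etc/sudoers.

```yaml
# Alternative delete section for ssh/tasks/main.yml (sketch)
- name: Load the removal list under its own variable
  ansible.builtin.include_vars:
    file: deleteusers.yml
    name: to_delete   # hypothetical name; the list becomes to_delete.users

- name: Deleting the users and their home directories
  ansible.builtin.user:
    name: "{{ item.username }}"
    state: absent
    remove: true
  loop: "{{ to_delete.users }}"

- name: Remove leftover sudoers entries for deleted users
  ansible.builtin.lineinfile:
    path: /etc/sudoers
    regexp: '^{{ item.username }} '
    state: absent
    validate: /usr/sbin/visudo -cf %s
  loop: "{{ to_delete.users }}"
```

Users such as user3 and user5 that never existed are simply reported as ok, because state: absent is idempotent.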

Finally, run the playbook using the inventory file hosts:

[ansible@controller ~]$ ansible-playbook ssh.yml -i hosts

Notice: the delete task executed in this run removed user2 from the Ansible-managed nodes:

TASK [ssh : Deleting The users] ****************************************************************************************************************

changed: [node2] => (item={u'username': u'user2'})

changed: [node1] => (item={u'username': u'user2'})

changed: [node3] => (item={u'username': u'user2'})

Thanks for reading!
